In a nutshell:

EMO 2025 will highlight turnkey cells that pair machine tools with cobots/AMRs and embedded AI vision. The technical edge comes from reliable, low-latency inference within 5–10 W power envelopes, robust illumination, and safe integration with CNCs and PLCs.

Components that matter: global-shutter cameras, controlled lighting, deterministic triggers/encoders, compact NPUs/SoCs/FPGAs, and safety-rated scanners for mobile/robotic motion.

Success depends on disciplined optics/lighting, dataset design, on-device MLOps, and compliance with robot/AMR safety standards.

With labor tight and quality demands unrelenting, manufacturers are now automating the handoffs around machine tools as much as the cuts themselves. The recipe powering the newest cells is clear: collaborative robots and mobile platforms to move parts, and edge AI vision to decide, in milliseconds, what to pick, where to place and whether a surface is good enough. The technology stack is no longer experimental. What matters now is making it predictable, safe and maintainable on the shop floor. EMO 2025 in Hannover will showcase just that, with turnkey tending cells, inline inspection stations and autonomous mobile robots weaving between booths.

The “edge AI” claim used to be marketing shorthand; in 2025 it denotes clear design targets. On-camera or near-sensor compute is expected to deliver low-latency inference in 5–10 W thermal envelopes, without a round trip to the cloud. That drives component choices: global-shutter cameras with deterministic triggers for motion-synchronized capture, controlled lighting with microsecond strobe rise times and overdrive capability to freeze motion, and compact NPUs, SoCs or small FPGAs that run quantized CNNs and classic vision side by side. Latency budgets are engineered backwards from the cell: a typical end-to-end window, from exposure to robot actuation, lands between 50 and 150 ms, with capture in single-digit milliseconds, inference in tens of milliseconds and controller handshakes taking up the remainder. What matters is not just the average latency but the worst case under load, so systems must include back-pressure strategies that keep queues from growing when throughput spikes.
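One way to picture the back-pressure point is a bounded frame queue that sheds the oldest frame when inference falls behind. The sketch below is illustrative only: the class `LatestFrameQueue`, the `simulate` helper and all timing values are hypothetical, and drop-oldest is just one of several possible policies.

```python
from collections import deque


class LatestFrameQueue:
    """Bounded frame queue that sheds the oldest frame when full."""

    def __init__(self, max_depth):
        self._frames = deque(maxlen=max_depth)
        self.dropped = 0

    def __len__(self):
        return len(self._frames)

    def push(self, frame_id, capture_ms):
        # deque(maxlen=...) evicts the oldest item silently, so count it here.
        if len(self._frames) == self._frames.maxlen:
            self.dropped += 1
        self._frames.append((frame_id, capture_ms))

    def pop(self):
        return self._frames.popleft()


def simulate(frame_period_ms, inference_ms, n_frames, max_depth):
    """Single inference worker fed at a fixed frame rate.

    Returns (worst queue wait in ms, number of dropped frames).
    """
    queue = LatestFrameQueue(max_depth)
    worker_free_at = 0.0
    worst_wait = 0.0
    for i in range(n_frames):
        now = i * frame_period_ms
        # Start every queued frame the worker could have begun before 'now'.
        while worker_free_at <= now and len(queue) > 0:
            _, captured = queue.pop()
            start = max(worker_free_at, captured)
            worst_wait = max(worst_wait, start - captured)
            worker_free_at = start + inference_ms
        queue.push(i, now)
    return worst_wait, queue.dropped


if __name__ == "__main__":
    # Hypothetical overload: frames every 10 ms, inference takes 15 ms.
    print("depth 2:     ", simulate(10, 15, 200, 2))
    print("depth 10000: ", simulate(10, 15, 200, 10_000))
```

With a small depth the queue wait stays bounded at the cost of dropped frames; with an effectively unbounded queue nothing is dropped but the wait keeps growing for as long as the overload lasts.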

Optics and lighting make or break these deployments. Lens resolution must match sensor pixel size and the smallest defect of interest; depth of field must suit the working distance and part tolerances; and polarization and wavelength choices should suppress glare from coolants and machined surfaces. Telecentric lenses and stable mounts are finding their way into more cells as metrology-grade measurements move closer to the tool. Strobe controllers allow very short exposures, on the order of 20 to 100 microseconds, to eliminate blur when parts are moving, but their thermal and duty-cycle limits must be respected. Encoders or photogates tied into opto-isolated I/O provide consistent triggers, and multi-camera systems benefit from PTP-based time synchronization to keep frames aligned.
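The link between exposure time and blur is simple arithmetic: blur in pixels is the distance the part travels during the exposure divided by the object-space size of one pixel. A minimal sketch, with hypothetical function names and example values that are assumptions rather than figures from any particular cell:

```python
def motion_blur_px(part_speed_mm_s, exposure_us, mm_per_px):
    """Blur in pixels for a part moving across the field of view."""
    travel_mm = part_speed_mm_s * exposure_us * 1e-6
    return travel_mm / mm_per_px


def max_exposure_us(part_speed_mm_s, mm_per_px, max_blur_px=1.0):
    """Longest exposure (microseconds) that keeps blur within max_blur_px."""
    return max_blur_px * mm_per_px / part_speed_mm_s * 1e6


if __name__ == "__main__":
    # Assumed example: part moving at 500 mm/s, 0.05 mm per pixel.
    for exposure in (20, 100, 1000):
        blur = motion_blur_px(500, exposure, 0.05)
        print(f"{exposure:>5} us exposure -> {blur:.2f} px blur")
    print(f"1 px limit -> {max_exposure_us(500, 0.05):.0f} us")
```

Under these assumed values a 100 microsecond exposure gives about one pixel of blur, while a 1 ms exposure smears the part over roughly ten pixels.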

Safety boundaries govern how close robots and people can work. Collaborative modes are defined and validated under ISO 10218 and ISO/TS 15066, which set limits on power, force and speed and define speed and separation monitoring. Autonomous mobile robots, increasingly used to ferry trays to and from machines, operate under ISO 3691‑4; safety laser scanners with PL d/SIL 2 performance and certified speed control functions are the norm. Crucially, vision used for product inspection is not a safety function. Unless a device is explicitly certified for protective use, AI models must not be relied upon for personnel safety; they can inform, but they cannot interlock. That separation keeps deployments compliant and reduces the risk of false assumptions.
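For speed and separation monitoring, the sizing logic can be sketched with the constant-speed simplification of the protective separation distance commonly quoted from ISO/TS 15066. Every number below is a hypothetical placeholder, the function name is an assumption, and the sketch only illustrates how the terms add up; actual values come from the robot's certified stopping data and the cell's validated risk assessment.

```python
def protective_separation_mm(
    v_human_mm_s,    # approach speed of the person
    v_robot_mm_s,    # robot speed toward the person
    t_react_s,       # reaction time of sensing plus control
    t_stop_s,        # robot stopping time
    stop_dist_mm,    # distance the robot travels while stopping
    intrusion_mm,    # intrusion distance C of the sensing field
    z_sensor_mm,     # position uncertainty of the person (sensor)
    z_robot_mm,      # position uncertainty of the robot
):
    """Constant-speed form: S_p = S_h + S_r + S_s + C + Z_d + Z_r."""
    s_human = v_human_mm_s * (t_react_s + t_stop_s)  # person keeps walking
    s_robot = v_robot_mm_s * t_react_s               # robot moves until it reacts
    return (s_human + s_robot + stop_dist_mm
            + intrusion_mm + z_sensor_mm + z_robot_mm)


if __name__ == "__main__":
    # Hypothetical inputs; 1600 mm/s is the walking speed often assumed
    # when no measured human speed is available.
    distance = protective_separation_mm(
        v_human_mm_s=1600, v_robot_mm_s=500,
        t_react_s=0.1, t_stop_s=0.3, stop_dist_mm=100,
        intrusion_mm=0, z_sensor_mm=50, z_robot_mm=20,
    )
    print(f"protective separation distance: {distance:.0f} mm")
```

The human term usually dominates, which is why shortening the reaction and stopping times tends to shrink the required zone more than slowing the robot does.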

Modeling and maintenance are where many projects stumble. Datasets need to reflect the factory’s reality, not the lab’s: include glare, dust, coolant films, and the full range of acceptable variation. Domain randomization helps, but nothing replaces representative captures across shifts, fixtures and lot changes. Version datasets and models, track confusion matrices in production, and A/B test updates before promotion. Shops adopting on-device MLOps are seeing benefits from signed model packages, OTA updates with rollback and audit trails that tie model versions to quality outcomes. Combined with secure boot, SBOMs and TLS-protected communications, these measures align with IEC 62443 principles and make IT/OT sign-off smoother.
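Tracking confusion matrices in production comes down to recomputing per-class precision and recall for each model version and refusing to promote a candidate that regresses. The following is a minimal sketch; the class labels, counts and the `recall_regressions` gate are hypothetical illustrations, not a prescribed promotion policy.

```python
def per_class_metrics(confusion, labels):
    """confusion[i][j] = count of parts with true class i predicted as class j.

    Returns {label: (precision, recall)}.
    """
    metrics = {}
    for k, label in enumerate(labels):
        tp = confusion[k][k]
        fn = sum(confusion[k]) - tp                  # true class k, predicted otherwise
        fp = sum(row[k] for row in confusion) - tp   # predicted k, actually otherwise
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        metrics[label] = (precision, recall)
    return metrics


def recall_regressions(baseline, candidate, tolerance=0.0):
    """Labels whose recall in the candidate falls below the baseline."""
    return [
        label for label, (_, base_recall) in baseline.items()
        if candidate[label][1] < base_recall - tolerance
    ]


if __name__ == "__main__":
    labels = ["good", "scratch", "burr"]           # assumed defect classes
    production = [[90, 5, 5], [2, 18, 0], [1, 0, 9]]
    candidate = [[92, 4, 4], [1, 19, 0], [2, 0, 8]]
    base = per_class_metrics(production, labels)
    cand = per_class_metrics(candidate, labels)
    print("regressed classes:", recall_regressions(base, cand))
```

In this made-up comparison the candidate improves two classes but misses more burrs, so a recall gate would hold it back even though overall accuracy went up.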

Visitors to EMO 2025 will see fewer isolated robot arms and more complete cells: cobots tending CNCs with vision-guided picking, inline stations classifying surfaces into go/no-go bins, and AMRs docking at machines to swap pallets autonomously. Expect to see vendors demonstrate measured timing, from trigger to robot move, under live conditions, show lighting fixtures that hold contrast under ambient changes, and reveal how their systems integrate with CNCs and PLCs over industrial Ethernet or OPC UA. The most credible exhibits will put up production-like metrics: precision/recall for defect classes under booth lighting, P95 and P99 end-to-end latencies, and docking success rates.
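Tail latencies such as P95 and P99 can be read straight from logged trigger-to-move timings. The sketch below uses the nearest-rank percentile definition and made-up samples; the 150 ms budget is taken from the upper end of the typical window cited earlier, and everything else is an assumption for illustration.

```python
import math
from statistics import mean


def percentile_nearest_rank(samples, pct):
    """Nearest-rank percentile: smallest value with at least pct% of samples at or below it."""
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


if __name__ == "__main__":
    # Hypothetical log: mostly 60 ms, a few slow cycles, one stall.
    latencies_ms = [60] * 95 + [120] * 4 + [400]
    budget_ms = 150
    print(f"mean: {mean(latencies_ms):.1f} ms")
    for pct in (95, 99, 100):
        value = percentile_nearest_rank(latencies_ms, pct)
        verdict = "within" if value <= budget_ms else "over"
        print(f"P{pct}: {value} ms ({verdict} budget)")
```

The mean of this sample looks comfortable, yet the single stalled cycle still breaks the budget, which is the gap that average FPS figures hide.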

For buyers, the evaluation is practical. Demand timing diagrams and worst-case latency measurements, not just average FPS. Ask to see how the system behaves when frames drop or compute throttles. Review lens and lighting selections against your parts, not generic examples. Confirm that robots and AMRs carry current certifications and that stopping distances and safety zones have been calculated for the demonstrated paths. And insist on lifecycle evidence: long-term availability of compute modules, stable toolchains and kernels, secure update processes, and support SLAs that match production needs.

Zero-touch automation is not about removing humans; it’s about removing friction and variability from the hours machines spend waiting for the next part or an inspection verdict. The technology is ready when it’s engineered with discipline. EMO 2025 will make that case on the floor. The shops that leave with a plan to standardize optics, enforce dataset governance and lock timing budgets will be the ones that translate demos into dependable output.

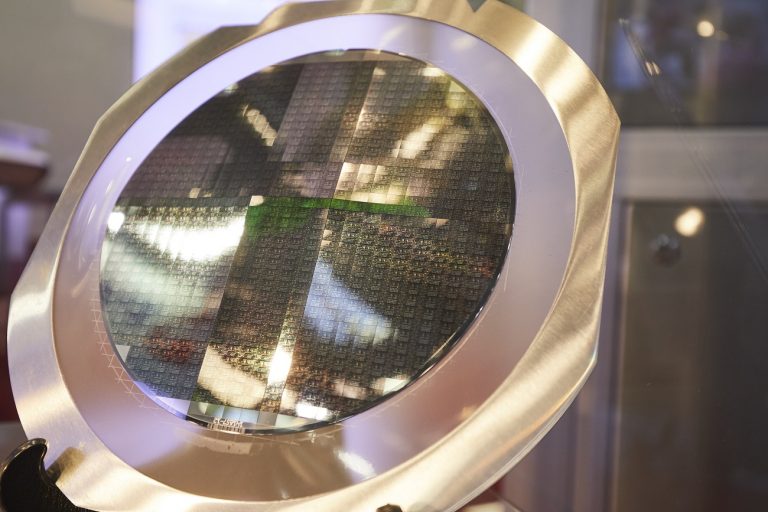

Title photo by Youn Seung Jin
